What is Database Delegation / Push Down Optimization?

In large-scale Data Warehouse deployments, the performance of reports is critical for users of the system. Designers and Developers have several tricks under their belt, among which one popular approach is ‘Database Delegation’, and at times, this term is also referred to as ‘Push down Optimization’. BI tools in the market today employ different methods to achieve the same end result.

One primary motivation to adopt this approach is that hardware installed at the database and application layer(s) are high-performance systems. From a high level, tasks that are computationally intensive or efficient to be done in a downstream system are handed over i.e., ‘delegated’ to the preceding layer, and only results are sent back to requesting layer.

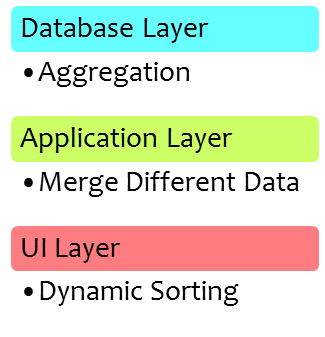

Aggregating data is a good example, where the database layer performs this task in much more efficient manner than other layers. In case of merging data from two different data sources, the application layer is best equipped to handle the task, whereas if the user is performing actions such as sorting and ranking in ad-hoc manner, the UI layer is the right place to handle such tasks.

layers. In case of merging data from two different data sources, the application layer is best equipped to handle the task, whereas if the user is performing actions such as sorting and ranking in ad-hoc manner, the UI layer is the right place to handle such tasks.

Design Considerations:

Database delegation relies heavily on Data Warehouse Modelling techniques that can be implemented during the design phase. Ample forethought is required to design the schema and data containers that will store and process efficiently. Extreme push-down optimization keeps this aspect in the forefront and the entire architecture of data flow-related data capture, storage, and reporting is built around it. All Data Warehouse Environments (DWE) have implemented this concept in some form or other, but the extent of scope varies to a high degree. Some points to remember are:

- Volume of data that is transferred from one layer to another reduces considerably

- Highly efficient usage of resources at all levels

- Not all measures can be delegated directly, and some degree of computation is required within ‘UI Layer’

- Business rules might pose hurdles in design and architecture.

- Bad optimization will lead to a high degree of data transfer, affecting both network and system performance.

- Dependence on a high-speed bandwidth network i.e., an always-connected environment

- Changing ‘live’ objects require a high degree of effort

Performance Improvement activities in a DWE always is an ongoing effort that is done periodically. Database Delegation / Push Down Optimization is predominantly a data model design-based approach that can be adopted for DWE performance.

In some cases, certain actions must be delegated to the database. Here is an example. Consider a customer has ordered two products as follows

Product A - Quantity 10 - Price - 100

Product B - Quantity 100 - Price 10

If the data is aggregated and the value is computed in the front end for each customer, it will show 12100 (110 * 110). which is wrong. The correct value is 2000 (1000 + 1000).