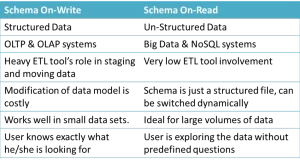

As a parallel concept to Schema On-Read and Schema On-Write, one has to first understand the difference between structured and unstructured data. These terms became a part of common parlance after big data tools (Hadoop, Map Reduce, etc.) exploded in the scene. Fundamentally, we are trying to differentiate two diametrically opposite methodologies of storing, manipulating, and analyzing large volumes of data.

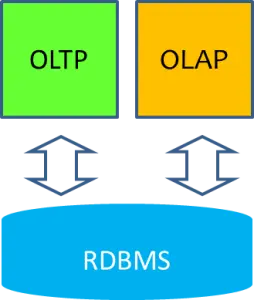

In the evolution of data storage methods used by applications, one can count three distinct phases. The concept of schema was introduced by Relational Database Management Systems (RDBMS). The need for a clearly defined schema along with normalization of data stemmed from transaction processing applications. RDBMS systems ensured that transactions were ACID (Atomicity, Consistency, Isolation, Durability) compliant, which enabled very fast adoption by commercial organizations. (There are other database architectures such as Object Databases, Hierarchy Databases, etc., but none of the architectures have such a widespread adoption akin to Relational Databases)

After collecting vast amounts of data, the organization realized that the traditional transaction processing systems had several bottlenecks when it came to analytical needs. A new field called Data Warehousing & Business Intelligence was born that addressed such concerns by building Online Analytical Processing (OLAP) systems using Relational Databases. The key thing to note here is that the underlying data management architecture remained the same.

Schema On-Write

This was the standard practice where the data warehouse model is defined well in advance. ETL tools performed the bulk of work pertaining to fetching, cleaning, and applying business rules and ensured that data is written to tables exactly as per the predefined structure.  Adopting a schema-on-write approach ensured that all data wrangling is done well in advance and final data that is available for analysts are clean and formatted. Essentially Schema On-Write concept enforced structure on incoming data into the warehouse even if the source is providing unstructured or semi-structured data.

Adopting a schema-on-write approach ensured that all data wrangling is done well in advance and final data that is available for analysts are clean and formatted. Essentially Schema On-Write concept enforced structure on incoming data into the warehouse even if the source is providing unstructured or semi-structured data.

Schema On-Read

This methodology basically eliminates the ETL layer altogether and keeps the data from the source in the original structure. Basically, entire data is dumped in the data store, where the system manages storing data across the entire landscape. All Big Data and NoSQL tools follow this approach and just split the files across all processing nodes. During information retrieval, two elements are required i.e., Schema Definition & Processing Logic. When a user wants to analyze the data, he/she creates a schema that is fitted to the data at run-time and processing logic performs the data manipulation part with reference to the defined.

Each approach targets different types of analytical scenarios. In today’s context, DW/BI implementations feature both methodologies and both types of systems co-exist.